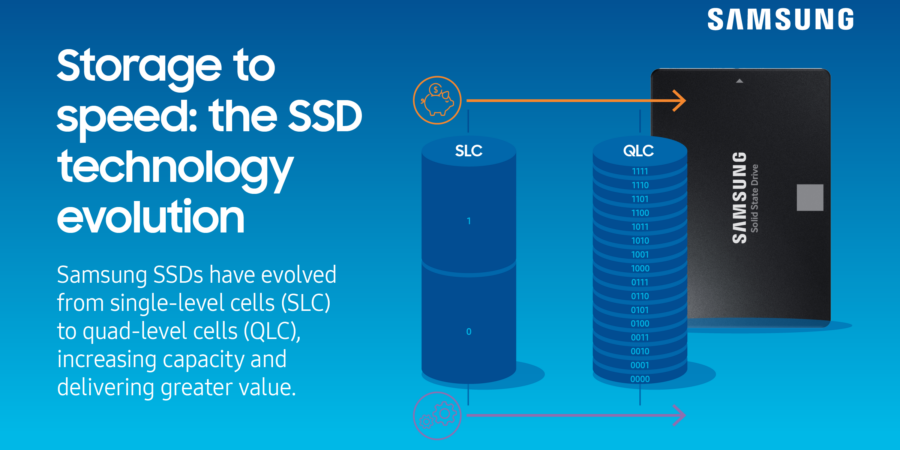

When solid state drives (SSDs) first entered the mainstream market, the effect was transformative: smaller, faster drives delivered huge gains in performance along with greater reliability than traditional hard disk drives (HDDs).

Today’s SSDs deliver far greater speed and storage capacity than those first-generation drives, accelerating business, gaming, healthcare and more. By looking at how the technology has evolved, you can also get a clear view of what the future will hold for SSD components, benefits and applications.

What makes SSD storage faster?

Solid state drives (SSDs) use non-volatile flash memory cells to store data. Unlike spinning-platter hard drives, SSDs have no moving parts. Instead, information is stored directly on flash memory chips, which makes SSDs much faster at reading and writing data.

Here’s how this works: a grid of electrical cells in NAND flash, a type of non-volatile memory, stores the data for near-instant recall. “Non-volatile” means that NAND flash doesn’t need constant power to retain data. This makes SSDs suitable for long-term storage, without a spinning-disk HDD’s potential for mechanical failure.

There’s another reason why SSDs are faster than traditional HDDs. HDDs have long included a small amount of random access memory (RAM) called a “cache” within the drive’s case, typically anywhere from 8 MB to 256 MB. The cache is designed to increase the drive’s perceived read/write performance by storing frequently requested information. If the requested data isn’t in the cache, it has to be read from the slower spinning disk, so these small caches often don’t produce noticeably faster speeds.
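
To see why a small cache often makes little difference, it can help to model average access time as a weighted mix of cache hits and misses. The following is a minimal sketch in Python, not a benchmark: the function name and both latency figures are illustrative assumptions rather than measurements of any particular drive.

```python
def effective_latency_ms(hit_rate, cache_latency_ms, media_latency_ms):
    """Average latency per request for a given cache hit rate (0.0 to 1.0)."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    # Hits are served from the cache; misses fall through to the storage medium.
    return hit_rate * cache_latency_ms + (1.0 - hit_rate) * media_latency_ms


# Hypothetical figures, chosen only to show the shape of the trade-off.
CACHE_MS = 0.01  # assumed access time for the drive's RAM cache
HDD_MS = 10.0    # assumed seek time plus rotational delay for a spinning disk

for hit_rate in (0.0, 0.1, 0.5, 0.9):
    latency = effective_latency_ms(hit_rate, CACHE_MS, HDD_MS)
    print(f"hit rate {hit_rate:.0%}: {latency:.3f} ms on average")
```

Under these assumed numbers, a low hit rate leaves the average close to the raw disk latency, which is why a cache that is tiny relative to the drive tends to have limited effect.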

SSD caches, on the other hand, can be in the gigabytes. They buffer requests to improve the longevity of the drive and serve short bursts of read/write requests faster than the regular drive memory allows. These caches are essential in enterprise storage applications, including heavily used file servers and database servers.

Additionally, since there are no spinning platters or actuator arms, there is no need to wait for the physical parts to ramp up to operating speed. This feature eliminates a performance hit that hard drives cannot escape. What’s more, new interfaces have made it possible for data transfer rates to far exceed the capability of traditional spinning media.

Applications for SSDs

Because of their clear advantages in speed and reliability, SSDs are popular for the following use cases:

- To host both the database engine and the database itself for quick access.

- As a “hot” tier in a stratified network storage archive, where frequently accessed data can be retrieved and rewritten very quickly.

- In situations where reliability is absolutely necessary, such as supporting 24/7 enterprise apps.

- In business settings where both operating system and applications must load quickly and data is constantly being read and written.
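
The "hot" tier idea in the list above can be sketched in a few lines of Python. This is only an illustration of one simple placement policy (most frequently accessed objects go to the SSD tier); the function name, the access log and the capacity value are all hypothetical, and real tiering software typically weighs recency, object size and cost as well.

```python
from collections import Counter


def assign_tiers(access_log, hot_capacity):
    """Split objects into a hot (SSD) tier and a cold tier by access count.

    access_log   -- iterable of object names, one entry per access
    hot_capacity -- how many objects the hot tier can hold (assumed unit: objects)
    """
    counts = Counter(access_log)
    # The most frequently accessed objects are placed on the hot tier.
    hot = {name for name, _ in counts.most_common(hot_capacity)}
    cold = set(counts) - hot
    return hot, cold


# Hypothetical access log for three objects.
log = ["orders.db", "report.pdf", "orders.db", "archive.zip", "orders.db", "report.pdf"]
hot, cold = assign_tiers(log, hot_capacity=2)
print("hot tier:", sorted(hot))
print("cold tier:", sorted(cold))
```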

How to choose the right SSD for your needs

Over the past few years, SSDs have changed in several ways. One of the most recent is the move to the PCIe® interface (Peripheral Component Interconnect Express, a low-latency computer expansion bus) in place of other interface technologies, such as serial advanced technology attachment (SATA).

PCIe SSDs connect to a system through a PCIe slot, the same type of slot used for high-speed video cards and other expansion cards. PCIe 1.0 launched in 2003 with a transfer rate of just 2.5 gigatransfers per second (GT/s) and a total bandwidth of 8 Gbps. GT/s measures how many raw bit transfers, including encoding overhead, each lane of the bus can carry per second.

Current high-performance SSDs use the PCIe 4.0 specification, which features a 16 GT/s rate and bandwidth of 64 Gbps. PCIe is now being paired with Non-Volatile Memory Express (NVMe®), also known as the non-volatile memory host controller interface specification, a communications protocol for high-speed storage systems that runs on top of PCIe.
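
The relationship between transfer rate and bandwidth can be sketched with a little arithmetic. The example below assumes a four-lane (x4) link, which is common for SSDs but is not stated above, and uses the line encodings defined for each PCIe generation (8b/10b for PCIe 1.0, 128b/130b for PCIe 4.0). Under that assumption, the 8 Gbps figure corresponds to usable bandwidth after encoding overhead, while the 64 Gbps figure corresponds to the raw rate. The class and function names are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PcieGeneration:
    name: str
    gigatransfers_per_s: float  # raw transfer rate per lane, in GT/s
    payload_bits: int           # data bits carried per encoded group
    encoded_bits: int           # bits actually sent on the wire per group


PCIE_1 = PcieGeneration("PCIe 1.0", 2.5, 8, 10)      # 8b/10b encoding
PCIE_4 = PcieGeneration("PCIe 4.0", 16.0, 128, 130)  # 128b/130b encoding


def link_bandwidth_gbps(gen, lanes, usable=False):
    """Bandwidth of a link in Gbps; usable=True subtracts encoding overhead."""
    raw = gen.gigatransfers_per_s * lanes
    if usable:
        return raw * gen.payload_bits / gen.encoded_bits
    return raw


LANES = 4  # assumption: a typical x4 SSD link
for gen in (PCIE_1, PCIE_4):
    raw = link_bandwidth_gbps(gen, LANES)
    usable = link_bandwidth_gbps(gen, LANES, usable=True)
    print(f"{gen.name} x{LANES}: {raw:.1f} Gbps raw, {usable:.2f} Gbps usable")
```

Dividing a usable figure by 8 converts it to gigabytes per second, which is the unit drive read and write speeds are usually quoted in.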

This development means that blazing-fast speeds and high reliability are available for the data center, ready to replace older, inefficient HDDs. The Samsung PM9A3 features a U.2 form factor for maximum compatibility as well as a PCIe 4.0 NVMe interface for speed, including sequential read speeds of up to 6,900 MB/s. The PM9A3 also includes AES 256-bit encryption to protect your sensitive data.

For data centers invested in SATA technology, there’s an SSD alternative to spinning platters. The Samsung PM893 offers SSD reliability, the same level of encryption as the PM9A3, and a sequential read speed of up to 550 MB/s, which is three to four times faster than a typical HDD.

Desktop and laptop users can take advantage of the latest SSD capabilities. Samsung’s PCIe 4.0-compatible 990 PRO delivers read speeds up to 7,000 MB/s, making it twice as fast as PCIe 3.0 SSDs and 12.7 times faster than some SATA SSDs.
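
To put the sequential read speeds quoted above in perspective, here is a rough Python sketch that converts them into read times for a single large file. The 100 GB file size, the labels and the function names are assumptions made for illustration, and the speeds are the "up to" figures from the text, so real-world results will vary.

```python
# "Up to" sequential read speeds quoted in this article, in MB/s.
SEQUENTIAL_READ_MB_S = {
    "SATA SSD": 550,
    "PCIe 4.0 NVMe SSD (data center)": 6_900,
    "PCIe 4.0 NVMe SSD (desktop/laptop)": 7_000,
}


def read_time_seconds(size_gb, speed_mb_s):
    """Best-case time to read size_gb (decimal gigabytes) at speed_mb_s."""
    return size_gb * 1000 / speed_mb_s


def speedup(fast_mb_s, slow_mb_s):
    """How many times faster one drive is than another."""
    return fast_mb_s / slow_mb_s


FILE_SIZE_GB = 100  # hypothetical file size
for label, speed in SEQUENTIAL_READ_MB_S.items():
    seconds = read_time_seconds(FILE_SIZE_GB, speed)
    print(f"{label}: about {seconds:.1f} s to read {FILE_SIZE_GB} GB")

ratio = speedup(7_000, 550)
print(f"7,000 MB/s is about {ratio:.1f} times faster than 550 MB/s")
```

The last line reproduces the 12.7x comparison: 7,000 divided by 550 is roughly 12.7.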

What does the future hold?

In the short term, capacities will continue to ramp up, while the cost per GB for SSDs will continue to decrease. New form factors with more parallel data transmission lanes between storage and the host bus will emerge to increase the speed and quality of the NAND storage medium.

The physical layer of cells that holds the blocks and pages will improve, offering better reliability and performance. Chip density will continue to grow at an astounding rate, allowing SSDs to fit more capacity in the same space. Most exciting, PCIe 5.0 drives are on the way, with an interface that offers up to twice the bandwidth of PCIe 4.0. This next generation of SSDs will soon be commonplace, enabling users to accomplish more in less time and driving new levels of efficiency, productivity and innovation.
